The future of data platforms is modular, not monolithic.

For years, companies have built huge centralized “cathedrals” for their data, but these systems, which are rigid and slow to update, struggle to keep pace with the speed of business.

Agility and scalability today require a different approach: no longer a single block, but an ecosystem of specialized and reusable components.

This is the heart of Composable Data & Analytics (D&A): An architectural paradigm that abandons rigidity for a flexible model, allowing companies to “compose” tailor-made solutions by freely combining analytical engines, integration tools, and applications.

In this new scenario, with the rise of AI, it also becomes essential to standardize how models communicate with the tools and data sources around them.

This is where the Model Context Protocol (MCP) comes into play, providing a common language to ensure interoperability and governance between the various intelligent components of the platform.

From Projects to Products

At the heart of the Composable Data & Analytics paradigm lies a profound conceptual shift: abandoning the traditional “project” orientation in favor of a “product” orientation, inspired by the principles of data mesh and data product thinking.

In fact, Composable D&A maintains points of contact with data mesh and data product thinking, but focuses on architectural flexibility and the modular composition of analytical solutions.

Unlike data mesh, the implementation of Composable D&A does not involve the restructuring of organizational processes, but merely provides a modular framework for data integration and orchestration.

| Aspect | Data Mesh | Data Product Thinking | Composable D&A |
| --- | --- | --- | --- |
| Focus | Organization & governance | Product approach to data | Modular architecture and technology |
| Domain | Autonomous domain teams | User experience and value | Technological flexibility and integration |
| Technology | Not specified | Not specified | Client-centric (API, microservices, SaaS) |
| Ultimate goal | Scalability and distributed ownership | Data quality and value | Speed, composition, and adaptability |

In this vision, each information domain is encapsulated in a data product, i.e., an autonomous entity that exposes reliable, usable, and governed data with clear accountability.

A data product is no longer a simple table or view to be queried, but a digital artifact with its own identity, defined ownership (typically by a data product owner), and an explicit promise of value for downstream consumers.

In the initial phase, data product owners are generally drawn from the long-standing BI team, i.e., those who previously managed the data warehouse or its functional verticals. This ensures continuity in data management and domain knowledge.

From a technical point of view, a data product consists of:

- Certified and versioned data, produced according to criteria of quality, traceability, and reliability (e.g., through data lineage and data contracts)

- Explicit transformation logic, incorporated through business rules or semantic calculations (e.g., derived metrics, aggregation logic, normalizations)

- KPIs and semantic layers, to facilitate interoperability with BI tools or analytical models

- Metadata and technical/functional documentation, useful for both discovery and consumer onboarding

- Standardized exposure mechanisms (e.g., RESTful APIs, GraphQL, SQL queries on data lakehouses, semantic catalogs, etc.)
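The components listed above can be pictured as a single descriptor. The following is a minimal sketch, not a standard format: the class names `DataProduct` and `Exposure`, the field names, and the example values are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Exposure:
    """One standardized way consumers can reach the product."""
    kind: str      # e.g. "rest", "graphql", "sql"
    location: str  # endpoint URL or table name (hypothetical values below)


@dataclass(frozen=True)
class DataProduct:
    """Hypothetical descriptor bundling the components of a data product."""
    name: str
    owner: str                               # accountable data product owner
    version: str                             # version of the certified dataset
    transformations: tuple[str, ...] = ()    # explicit business rules
    kpis: tuple[str, ...] = ()               # indicators exposed to consumers
    documentation: str = ""                  # technical/functional documentation
    exposures: tuple[Exposure, ...] = ()     # standardized exposure mechanisms

    def missing_components(self) -> list[str]:
        """Return the names of components that are still empty."""
        checks = {
            "owner": self.owner,
            "version": self.version,
            "transformations": self.transformations,
            "kpis": self.kpis,
            "documentation": self.documentation,
            "exposures": self.exposures,
        }
        return [label for label, value in checks.items() if not value]


sales = DataProduct(
    name="sales_orders",
    owner="sales-bi-team",
    version="1.2.0",
    transformations=("net_revenue = gross_revenue - discounts",),
    kpis=("net_revenue", "average_order_value"),
    documentation="Daily order facts for the sales domain.",
    exposures=(Exposure(kind="sql", location="lakehouse.sales.orders_v1"),),
)
draft = DataProduct(name="returns", owner="sales-bi-team", version="0.1.0")

print(sales.missing_components())  # prints []
print(draft.missing_components())
```

A check like `missing_components` could run in CI so that a product is published only once every component is in place.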

A Platform to Support Products

To make all this sustainable, you need a solid, automated platform.

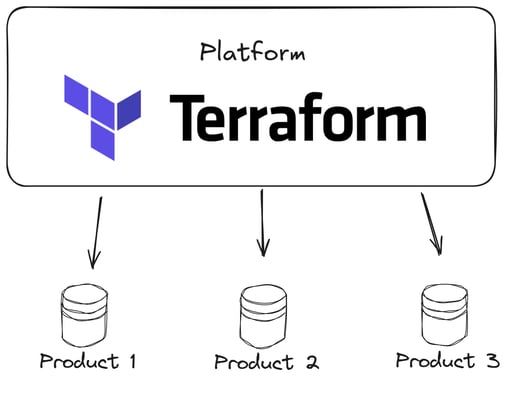

This is where the composable data platform comes in, a technological foundation that allows you to create, orchestrate, and monitor data products in a standardized way.

With tools such as Terraform (or IaC tools in general), the infrastructure needed for a new data product can be provisioned in just a few minutes, including pipelines, access permissions, Git repositories, and orchestration logic.

Terraform plays a crucial role in a modular platform, allowing you to automate the creation of a data product and thus shorten the time to market for the data.

Furthermore, through appropriate permission management, it helps secure access to the data by granting each user or service only the minimum set of permissions it needs (the principle of least privilege).
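To make the idea concrete, here is a minimal sketch of what a declarative provisioning plan with minimal permissions might look like. It is written in Python for illustration only and is not Terraform syntax; the function names, resource naming scheme, and plan layout are assumptions.

```python
def plan_data_product(name: str, consumers: list[str]) -> dict:
    """Build a declarative plan for one data product (hypothetical layout)."""
    writer = f"{name}-pipeline"
    # Minimal permissions: only the pipeline may write, consumers only read.
    grants = [{"principal": writer, "actions": ["read", "write"]}]
    grants += [{"principal": c, "actions": ["read"]} for c in sorted(consumers)]
    return {
        "git_repository": f"data-products/{name}",
        "pipeline": f"{name}-ingest",
        "writer": writer,
        "grants": grants,
    }


def over_privileged(plan: dict) -> list[str]:
    """Return principals, other than the pipeline, that hold write access."""
    return [
        grant["principal"]
        for grant in plan["grants"]
        if "write" in grant["actions"] and grant["principal"] != plan["writer"]
    ]


plan = plan_data_product("sales_orders", ["finance-analysts", "bi-dashboard"])
print([grant["principal"] for grant in plan["grants"]])
print(over_privileged(plan))  # prints []
```

In a real platform the plan would be expressed in the IaC tool's own language; the point of the sketch is that permissions are declared alongside the other resources and can be checked automatically before anything is applied.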

Automation, Quality, and Collaboration

One of the pillars of the Composable D&A model is the ability to automate the entire data lifecycle, from initial loading to publication and monitoring.

This is possible thanks to integration with orchestration, code versioning, and quality control tools that can be implemented on any cloud or on-premises infrastructure, provided they support an Infrastructure-as-Code (IaC) and CI/CD model.

Data pipelines, for example, can be defined in standard languages and formats such as SQL, Python, or YAML and managed through version control systems (such as Git), enabling automated testing, quality control, and simplified deployment.

To ensure data quality, frameworks are adopted that can:

- Define quality rules (consistency, accuracy, etc.)

- Perform automated testing on tables, transformations, or models

- Generate clear and navigable data lineage

- Trigger alerts in case of errors or regressions
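The first, second, and last capabilities in this list can be sketched in a few lines. This is an illustrative example, not the API of any particular quality framework: `QualityRule`, `run_checks`, `alerts`, and the sample rows are all hypothetical, and lineage generation is left out.

```python
from dataclasses import dataclass
from typing import Callable

Row = dict[str, object]


@dataclass(frozen=True)
class QualityRule:
    """A named rule; `check` returns True when a row passes."""
    name: str
    check: Callable[[Row], bool]


def run_checks(rows: list[Row], rules: list[QualityRule]) -> dict[str, int]:
    """Count failing rows per rule; only rules with failures are returned."""
    failures: dict[str, int] = {}
    for rule in rules:
        failed = sum(1 for row in rows if not rule.check(row))
        if failed:
            failures[rule.name] = failed
    return failures


def alerts(failures: dict[str, int]) -> list[str]:
    """Turn failure counts into alert messages."""
    return [
        f"ALERT: rule '{name}' failed on {count} row(s)"
        for name, count in failures.items()
    ]


RULES = [
    QualityRule("order_id_present", lambda r: r.get("order_id") is not None),
    QualityRule(
        "amount_non_negative",
        lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0,
    ),
]

rows = [
    {"order_id": 1, "amount": 120.0},
    {"order_id": None, "amount": 35.5},
    {"order_id": 3, "amount": -10.0},
]

failures = run_checks(rows, RULES)
for message in alerts(failures):
    print(message)
```

Because the rules are plain code, they can live in the same Git repository as the pipeline and run on every change.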

At the same time, the platform promotes collaboration between technical and business teams through shared interfaces, automated documentation, and self-service analysis tools. The goal is to reduce the distance between those who build data products and those who use them daily to make decisions.

The advantage of this approach is that it does not depend on a specific cloud vendor: The same logic can be replicated on AWS, Azure, GCP, or even in hybrid or on-premises environments using open-source or commercial tools. This ensures maximum architectural flexibility and reduces technological lock-in.

Democratization of Analysis

The true value of data is only realized when it becomes accessible to decision makers. The Composable model encourages the use of tools that facilitate interaction with data, even by non-technical users.

This can include:

- Self-service dashboards and intuitive visual interfaces

- Central semantic models to ensure consistency between KPIs

- AI assistants and conversational interfaces that respond to questions in natural language

All of this is independent of the cloud provider. Whether you use AWS, Azure, GCP, or a private data center, the important thing is that the tools are modular, standardized, and integrable.

In summary, the goal is not to choose the “best” cloud provider, but to build a resilient and flexible data analytics ecosystem, where the real value lies in the ability to put the right data, in the right format, in the hands of those who can transform it into strategic decisions, regardless of the technology that hosts it.

MCP and Composable D&A

MCP is an open-source protocol for standardizing how artificial intelligence models (LLMs and AI agents) connect to external tools, data sources, and APIs.

It provides a structured, machine-readable description of the available resources (tools, functions, data), with metadata on inputs and outputs, and it supports access control and different transport modes (e.g., stdin/stdout or HTTP).
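As an illustration, a tool description in MCP carries a name, a human-readable description, and a JSON Schema for its input. The sketch below follows that shape; the tool `query_sales_orders`, its fields, and the helper `missing_arguments` are assumptions made for this example.

```python
import json

# Tool descriptor with a name, a description, and a JSON Schema for the input.
# The tool itself and its parameters are hypothetical.
SALES_TOOL = {
    "name": "query_sales_orders",
    "description": "Return net revenue for a region and month.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "region": {"type": "string"},
            "month": {"type": "string", "description": "Format YYYY-MM"},
        },
        "required": ["region", "month"],
    },
}


def missing_arguments(tool: dict, arguments: dict) -> list[str]:
    """List required arguments absent from a call.

    This is a simplified check, not full JSON Schema validation.
    """
    required = tool["inputSchema"].get("required", [])
    return [key for key in required if key not in arguments]


print(json.dumps(SALES_TOOL, indent=2))
print(missing_arguments(SALES_TOOL, {"region": "EMEA"}))  # prints ['month']
```

Because the description is plain data, the same descriptor can be read by an AI agent deciding which tool to call and by a governance process reviewing what is exposed.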

MCP fits well with the Composable Data & Analytics paradigm. Integrating the two makes it possible to talk about an Agentic Composable Data platform, one that uses automation and AI to optimize the classic processes running on the platform.

In a more advanced setup, exposure through MCP could become a requirement for every data product. This would allow AI agents to explore the data of all available products, even those originally designed for reporting or for providing training datasets for AI models.

Below are some of the areas of contact between Composable D&A and MCP.

| Composable D&A Area | Role of the MCP |
| --- | --- |
| Modularity & product thinking | MCP promotes the clear definition of AI/data “products”: tools exposed as “data products/tool products” with standard interfaces. |
| Dynamic access to data | It allows AI agents to directly query data products exposed through the MCP server, reducing the need for custom integrations for each model. |
| Automation & reuse | An MCP server can be used by multiple models/agents; the tool defined once is reusable; standardized deployment. |
| Democratization/self-service | Business users or analysts can “ask” an AI agent for functions based on the data products available to them, via MCP, even if they do not know how to build complex pipelines. |
| Governance/security | MCP introduces a contract and metadata layer, which can be integrated into data product quality and security frameworks, enabling auditing, permissions, and traceability. |
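To show how an agent might query a data product through MCP, here is a simplified, in-process sketch. MCP messages follow JSON-RPC 2.0, and tools are discovered and invoked with the `tools/list` and `tools/call` methods. The sketch omits the initialization handshake and the transport layer, so it is not a complete MCP server; the tool `get_net_revenue` and the in-memory data are hypothetical.

```python
import json

# Hypothetical in-memory "data product": net revenue by (region, month).
NET_REVENUE = {("EMEA", "2024-01"): 125000.0, ("APAC", "2024-01"): 98000.0}

TOOLS = [{
    "name": "get_net_revenue",
    "description": "Net revenue for a region and month from the sales data product.",
    "inputSchema": {
        "type": "object",
        "properties": {"region": {"type": "string"}, "month": {"type": "string"}},
        "required": ["region", "month"],
    },
}]


def handle(request: dict) -> dict:
    """Answer a JSON-RPC 2.0 request for the two tool methods sketched here."""
    reply = {"jsonrpc": "2.0", "id": request.get("id")}
    method = request.get("method")
    params = request.get("params") or {}
    if method == "tools/list":
        reply["result"] = {"tools": TOOLS}
    elif method == "tools/call":
        if params.get("name") != "get_net_revenue":
            reply["error"] = {"code": -32602, "message": "Unknown tool"}
            return reply
        args = params.get("arguments") or {}
        value = NET_REVENUE.get((args.get("region"), args.get("month")))
        text = "no data" if value is None else json.dumps({"net_revenue": value})
        reply["result"] = {
            "content": [{"type": "text", "text": text}],
            "isError": value is None,
        }
    else:
        reply["error"] = {"code": -32601, "message": "Method not found"}
    return reply


call = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {
        "name": "get_net_revenue",
        "arguments": {"region": "EMEA", "month": "2024-01"},
    },
}
print(handle(call)["result"]["content"][0]["text"])  # prints {"net_revenue": 125000.0}
```

The tool is defined once and any agent that speaks the protocol can discover and call it, which is the reuse described in the table above.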

Points to Note

Like any powerful tool, MCP must be used with care, especially now that data security is increasingly critical.

- Security: MCP can expose attack surfaces (e.g., malicious tool provisioning, unauthorized access) if not properly configured

- Control of exposed tools: What permissions do you give to the MCP server? What can the model do? Solid governance is needed

- Maintenance and versioning: External tools change, APIs evolve, data schemas change; a system is needed to manage versions, compatibility, and deprecations

- Dependence on AI agents and model limitations: Even with MCP, the AI model must be sufficiently “intelligent” to understand the contract and use the tools correctly

- Performance and latency: If various exposed tools require external calls, bottlenecks can arise if the MCP server is not well positioned or optimized
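The first two points, security and control of exposed tools, can be addressed in part by placing a deny-by-default guard in front of tool calls. The sketch below is one possible approach, not a feature of MCP itself: the class `ToolGateway`, the role names, and the tool names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ToolGateway:
    """Hypothetical guard that authorizes tool calls and records every attempt."""
    allowed: dict[str, set[str]]  # agent role -> tool names it may call
    audit_log: list[tuple[str, str, bool]] = field(default_factory=list)

    def authorize(self, role: str, tool: str) -> bool:
        # Deny by default: unknown roles and unlisted tools are rejected.
        permitted = tool in self.allowed.get(role, set())
        # Every attempt is logged, whether it was permitted or not.
        self.audit_log.append((role, tool, permitted))
        return permitted


gateway = ToolGateway(allowed={"analyst-agent": {"get_net_revenue"}})

print(gateway.authorize("analyst-agent", "get_net_revenue"))  # prints True
print(gateway.authorize("analyst-agent", "drop_table"))       # prints False
print(gateway.authorize("unknown-agent", "get_net_revenue"))  # prints False
print(len(gateway.audit_log))                                 # prints 3
```

Keeping the allowlist in version control alongside the data products would also let permission changes go through the same review process as any other change.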

Conclusion

The Composable Data & Analytics approach has already revolutionized the way we design data platforms: A modular, automated, and governed ecosystem, where each data product is independent but integrated into a consistent and scalable architecture.

With the arrival of the Model Context Protocol (MCP), this paradigm can take a step further: Not only available and governed data, but intelligent data accessible via standardized AI agents, able to communicate directly with data products and exploit their capabilities without custom development or complex integrations.

Together, Composable D&A and MCP pave the way for data platforms where the modularity of composable architecture meets the flexibility of AI agents, data product governance meets access standardization via MCP, and pipeline automation integrates with the cognitive capabilities of AI models.

The result, when developed following best practices, is a data ecosystem that is not only composable and cloud-agnostic, but also intelligent, adaptive, and future-ready, where every new tool or data product can be connected and used as easily as adding a new piece to a pre-designed puzzle.

Ready to evolve your data architecture? Contact us to find out how our expertise can help you implement a Composable D&A and MCP strategy tailored to your organization.